Learnings from building Agentic Workflows for Science

Over the last year at DSV, we've developed Elman, an LLM co-pilot designed to enhance the quality, speed, and impact of scientific R&D. Used by hundreds of scientists and inventors, Elman has improved our accuracy, productivity, and research efficiency, cutting tasks that used to take weeks down to days.

Elman uses multiple LLM sub-agents to deploy methods for scientific venture creation, such as automatic literature review, approach generation, freedom-to-operate analysis, state-of-the-art search, talent search, and techno-economic modelling, and to autonomously handle tools, APIs, and code for more complex data analysis. We are now building Elman’s capabilities in targeted reasoning and goal setting, continuous learning, and long-term planning.

In this post, I want to share some of my learnings, focusing on the scope and potential of agentic workflows in science. Agentic workflows, characterised by their ability to execute complex, goal-directed processes autonomously, depart from traditional methods of scientific exploration: they not only reason in complex, unstructured environments but also collaborate, adapt, and iterate within them.

Emerging agentic design patterns

In these agentic flows, the emphasis is not on precise task instructions but on enabling agents, given the right data, to perform higher-order cognitive functions and abstractions such as tool use, reasoning, planning, and strategising. At a minimum, we are seeing and using the following modules in scientific LLM agents:

Perception modules allow agents to handle various data types, from images and text to complex datasets such as transcriptomics, genomics, patents, literature, or experimental data. Embodied AI agents extend this functionality into the physical world: they interact with and manipulate their environments through sensors and actuators, enabling uses such as robotic control and lab navigation.

Interaction modules let agents use APIs, search engines, and knowledge databases in service of scientific discovery. Notable examples include ChemCrow, which uses chemical analysis tools and search engines, and SayCan, which shows how an AI can control a robot to complete tasks by integrating machine learning models, knowledge databases, robotic machinery, and APIs.
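To make the interaction pattern concrete, here is a minimal sketch of a tool registry and dispatcher in Python. It assumes the LLM emits tool calls as JSON of the form `{"tool": ..., "args": {...}}`; that format, the `tool` decorator, `dispatch`, and both example tools are hypothetical illustrations, not ChemCrow's or SayCan's actual interfaces. The atomic weights are standard values for the four elements listed.

```python
import json
import re
from typing import Any, Callable, Dict

# Hypothetical registry: maps a tool name the LLM may emit to a Python callable.
TOOLS: Dict[str, Callable[..., Any]] = {}

def tool(name: str):
    """Decorator that registers a function as an agent-callable tool."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register

# Standard atomic weights (g/mol) for a few elements; extend as needed.
ATOMIC_MASS = {"H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999}

@tool("molar_mass")
def molar_mass(formula: str) -> float:
    """Molar mass of a simple formula such as 'C6H12O6' (no parentheses or charges)."""
    total = 0.0
    for element, count in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        total += ATOMIC_MASS[element] * (int(count) if count else 1)
    return round(total, 3)

@tool("celsius_to_kelvin")
def celsius_to_kelvin(celsius: float) -> float:
    return celsius + 273.15

def dispatch(tool_call_json: str) -> Dict[str, Any]:
    """Execute a tool call of the assumed form {"tool": ..., "args": {...}}.

    Failures are returned as data rather than raised, so the agent can read
    the error and try again.
    """
    try:
        call = json.loads(tool_call_json)
        result = TOOLS[call["tool"]](**call.get("args", {}))
        return {"ok": True, "result": result}
    except Exception as exc:  # surface any failure back to the model
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}

print(dispatch('{"tool": "molar_mass", "args": {"formula": "H2O"}}'))
print(dispatch('{"tool": "spectrum_lookup", "args": {}}'))
```

Returning errors as data is a deliberate choice: an agent that reads `KeyError: 'spectrum_lookup'` can pick a different tool, which is the same feedback channel that reasoning with feedback relies on.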

Reasoning modules are essential for an agent’s ability to work through context, generate hypotheses, and solve problems. They help the agent formulate and refine scientific inquiries and experiments. This area of agent design has received a lot of attention, leading to a range of techniques. Agents can use direct reasoning, such as chain-of-thought and multi-path reasoning, which can involve generating variations of chains, trees, or graphs of thought. We are also seeing approaches that reason with feedback, refining the agent’s reasoning by incorporating feedback from the environment, experiments, execution errors, humans, or self-assessment (e.g. Voyager).
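The two reasoning styles above can be sketched as control loops around a model. This is a minimal sketch under stated assumptions: `propose`, `check`, and `sample` stand in for LLM calls and evaluators, and the toy proposer/checker pair (finding x with x*x = 144) is purely illustrative. Voyager's actual loop is considerably richer.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple

Feedback = List[Tuple[int, str]]  # (rejected candidate, critique) pairs

def refine_with_feedback(
    propose: Callable[[Feedback], int],
    check: Callable[[int], Optional[str]],
    max_rounds: int = 10,
) -> Tuple[Optional[int], Feedback]:
    """Reasoning with feedback: propose, check, feed the critique back, repeat."""
    history: Feedback = []
    for _ in range(max_rounds):
        candidate = propose(history)  # stand-in for an LLM call conditioned on past critiques
        critique = check(candidate)   # stand-in for environment, experiment, tests, or a human
        if critique is None:
            return candidate, history
        history.append((candidate, critique))
    return None, history  # give up and escalate, e.g. to a human

def self_consistency(sample: Callable[[int], str], n_paths: int) -> str:
    """Multi-path reasoning: sample independent chains, majority-vote their final answers."""
    votes = Counter(sample(seed) for seed in range(n_paths))
    return votes.most_common(1)[0][0]

# Hypothetical toy stand-ins: the proposer only ever reads the critiques,
# and the checker reports whether x*x is below or above a target of 144.
def toy_propose(history: Feedback) -> int:
    lo, hi = 0, 100
    for candidate, critique in history:
        if critique == "too low":
            lo = max(lo, candidate + 1)
        elif critique == "too high":
            hi = min(hi, candidate - 1)
    return (lo + hi) // 2

def toy_check(x: int) -> Optional[str]:
    if x * x < 144:
        return "too low"
    if x * x > 144:
        return "too high"
    return None

answer, trace = refine_with_feedback(toy_propose, toy_check)
print(answer, trace)
print(self_consistency(lambda seed: "13" if seed % 4 == 3 else "12", n_paths=8))
```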

Planning modules are where agentic systems become intriguing, yet they remain less explored. These modules are central to an agent’s ability to outline actions and navigate complex tasks. At one end of the spectrum, task decomposition techniques break complex tasks into smaller, manageable sub-goals or adaptively reconfigure them as tasks evolve. Another approach is multi-plan selection, which generates multiple candidate action plans and selects the best one using search algorithms such as BFS, DFS, MCTS, or A*, making it possible to compare different strategies. Planner-aided approaches use symbolic and neural systems to convert tasks into actionable strategies, while reflection and refinement techniques iteratively improve plans by identifying and correcting errors. Memory-augmented planning, in turn, uses RAG-based memory and the agent's historical experiences to draw on relevant past insights when planning.
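Multi-plan selection can be framed as a search over plan states. The sketch below runs A* over a small, entirely hypothetical graph of research stages, where edge weights are assumed costs (say, days) and `heuristic` is an assumed lower bound on the remaining cost to the goal. In a real agent, an LLM would propose the candidate steps and estimate these costs.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, float]]]

def a_star(graph: Graph, start: str, goal: str,
           heuristic: Dict[str, float]) -> Optional[Tuple[float, List[str]]]:
    """Return (cost, path) of the cheapest plan from start to goal, or None."""
    frontier = [(heuristic[start], 0.0, start, [start])]  # (f = g + h, g, node, path)
    best_cost = {start: 0.0}
    while frontier:
        _, g, node, path = heapq.heappop(frontier)
        if node == goal:
            return g, path
        if g > best_cost.get(node, float("inf")):
            continue  # stale queue entry: a cheaper route to this node was found later
        for nxt, step_cost in graph.get(node, []):
            new_g = g + step_cost
            if new_g < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_g
                heapq.heappush(frontier, (new_g + heuristic[nxt], new_g, nxt, path + [nxt]))
    return None

# Hypothetical plan graph: stage -> [(next stage, assumed cost)].
research_plan: Graph = {
    "question": [("lit_review", 3), ("pilot_experiment", 10)],
    "lit_review": [("hypothesis", 2)],
    "pilot_experiment": [("hypothesis", 1)],
    "hypothesis": [("simulation", 4), ("wet_lab", 12)],
    "simulation": [("validated", 6)],
    "wet_lab": [("validated", 2)],
}
# Assumed lower bounds on remaining cost (never overestimate).
heuristic = {"question": 10, "lit_review": 8, "pilot_experiment": 7,
             "hypothesis": 6, "simulation": 6, "wet_lab": 2, "validated": 0}

print(a_star(research_plan, "question", "validated", heuristic))
```

With this heuristic (admissible and consistent for this graph), A* returns the literature-review-then-simulation route at a cost of 15 without ever expanding the costlier pilot-experiment or wet-lab branches.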

Lastly, memory modules equip agents with the ability to store, manage, and retrieve information efficiently, supporting decision-making and tracking interactions across time. Long-term memory provides a knowledge store, including internally coded data and externally sourced material. Short-term memory includes techniques for temporary information storage, such as in-context learning and embeddings.
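A memory module can be approximated with two stores: a bounded buffer for recent turns (short-term) and a searchable archive (long-term). This minimal sketch uses bag-of-words cosine similarity as a dependency-free stand-in for embedding retrieval; the `AgentMemory` class, its methods, and the example notes are hypothetical.

```python
import math
import re
from collections import Counter, deque
from typing import Deque, List, Tuple

def _vectorise(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class AgentMemory:
    def __init__(self, short_term_size: int = 4):
        # Short-term: only the most recent turns survive (oldest are dropped).
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: every stored note, kept with its vector for retrieval.
        self.long_term: List[Tuple[str, Counter]] = []

    def observe(self, text: str) -> None:
        self.short_term.append(text)

    def remember(self, text: str) -> None:
        self.long_term.append((text, _vectorise(text)))

    def recall(self, query: str, k: int = 2) -> List[str]:
        """Return up to k stored notes with non-zero similarity to the query."""
        q = _vectorise(query)
        ranked = sorted(self.long_term, key=lambda item: _cosine(q, item[1]), reverse=True)
        return [text for text, vec in ranked[:k] if _cosine(q, vec) > 0]

    def build_context(self, query: str) -> str:
        """Assemble a prompt context: recalled notes, recent turns, then the task."""
        return "\n".join(["# Recalled:", *self.recall(query),
                          "# Recent:", *self.short_term, "# Task:", query])

memory = AgentMemory(short_term_size=2)
memory.remember("Batch 12 assay: buffer at pH 7.4 gave stable readings")
memory.remember("Supplier quote for the bioreactor arrived on Monday")
for turn in ["turn 1", "turn 2", "turn 3"]:
    memory.observe(turn)
print(memory.build_context("which buffer pH gave stable readings"))
```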

The path towards optimal agentic workflow design in science is marked by ongoing exploration of candidate frameworks and architectures and their trade-offs. For example, multi-plan generation and selection require computational resources and still might not guarantee optimal outcomes, given unpredictable real-world conditions or a general lack of verifiability. Similarly, advanced reasoning modules may make the agentic system harder to debug and maintain while still missing the nuances of scientific reasoning. While there is some evidence that more agents are all you need, adding agents introduces its own trade-offs, primarily coordination and consistency among them. In the near future, I expect to see more frameworks higher up the stack that help with agentic workflow design.

The unbounded value of agentic workflows

Evaluating the potential and utility of agentic workflows is crucial, as we are just beginning to understand their capabilities. Traditionally, AI benchmarks have compared different versions or iterations within the same model family, like GPT-3.5 versus GPT-4.

However, GPT-3.5 embedded in an agentic workflow outperforms even more advanced models on coding tasks. Because such workflows gain quality by iterating rather than answering in a single fast pass, traditional productivity metrics based on speed are becoming outdated.

From Andrew Ng’s talk “What's next for AI agentic workflows”

Instead, the focus should shift towards the quality of scientific task completion, especially alignment with expert-level performance, including criteria such as scientific creativity, thoroughness, and problem-solving quality. I think new evaluation metrics should be developed to reflect the emerging capabilities of LLM agents, for example:

Synthetic agentic creativity might require new conceptualisations of creativity that account for LLMs’ ability to perform perceptual restructuring. This appears to be the case with AlphaZero, where the differences between human and machine play stem from how each builds relationships between positions and concepts. While humans develop prior biases about which concepts may be relevant in a given chess position, AlphaZero has developed its own unconstrained understanding of concepts and positions, enabling flexibility and creativity in its strategies.

Associative gradients, i.e. the ease with which ideas can be associated with each other, also differ greatly between humans and LLMs. In human cognition, this gradient can be steep: some ideas are closely linked and come to mind effortlessly, while others are more distantly connected and require larger leaps in thought. LLMs' gradients tend to be flatter, and well-scaffolded agents can flatten them further.

Lastly, agentic LLMs can be unreasonably good combinatorial reasoners. Combinatorial creativity defines creativity as the recombination of existing elements into new and valuable configurations. So far, we see that appropriately scaffolded science agents can reason fairly effectively through the components integral to combinatorial creativity in problem-solving: initial probability (the likelihood of certain combinations occurring at the outset of the creative process), final utility (the efficacy or value of a combination once it has been fully realised and applied to its intended context), and prior knowledge (how well the potential utility of a combination is understood before it is generated).

Scaffolding capability, not features

The evolution of the design patterns above points to a shift from improving specific features toward scaffolding capabilities. Agentic workflows require architectures that capture the depth, intent, and nuance of each interaction rather than relying on static feature building.

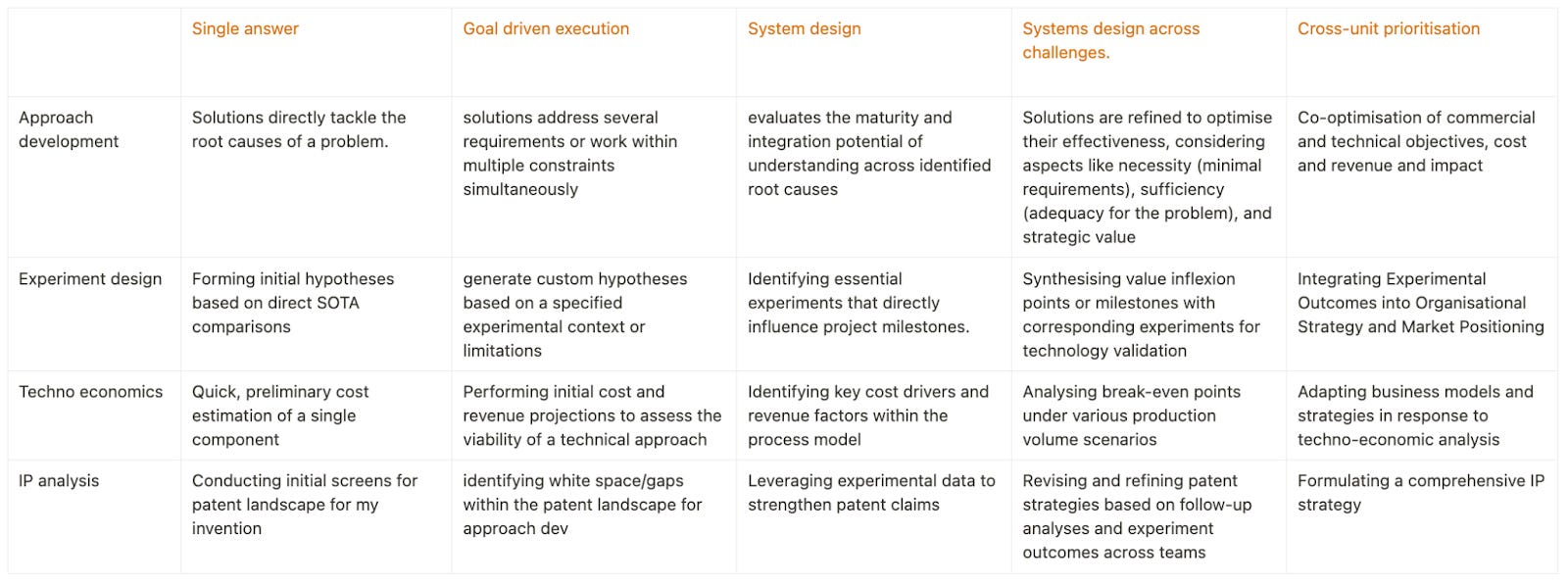

This is because a single capability must apply across multiple project stages and depths in ways that cannot be hardcoded or predetermined. Moreover, there are myriad entry and exit points, and many ways in which humans might interact with or modify an agent's path, which requires architectures that support dynamic, contextual responses.

Consider, for instance, the range of workflows we encounter while building science companies at DSV. These examples highlight that an effective agentic workflow must navigate different levels of abstraction, planning and optimising across all dimensions of a project, while also understanding these levels and user intents without explicitly hardcoded instructions.

The moats for agents are data, stateful heuristics and reasoning paths

Current LLMs often struggle with creative scientific outputs, limited by the scope and nature of their training data. Typically, AI training in scientific fields relies on datasets composed mainly of final-stage scientific publications, which omit negative results and lack depth in documenting the iterative and exploratory processes of scientific reasoning and decision-making.

Incorporating a broader array of scientific content into training datasets is crucial to enhancing LLM-driven problem-solving. This includes research papers, patents, conference proceedings, technical reports, and historical books on invention across various scientific fields.

Beyond explicit scientific data, effective AI also needs access to implicit, often undocumented knowledge such as heuristics, tacit knowledge and decision-making processes essential for inventive problem-solving, which are usually absent in traditional datasets for science. Currently, heuristic applications in AI are mostly confined to controlled environments; thus, developing a better understanding and adaptation of human heuristics is essential for creating more intuitive and effective agentic systems.

Escaping chat boxes and designing interfaces for understanding

We need to get out of chat boxes asap to accommodate the true affordances of scientific work, i.e. to evolve from simple input/output interfaces to Interfaces for Understanding. This requires attention to at least two areas: interaction roles and information representation.

First, scientists' role in agentic interactions will shift from basic command input to higher-order orchestration: directing agentic operations while maintaining oversight. Chat interfaces fall short of supporting the full spectrum of scientific tasks that could benefit from AI, such as creating diagrams, performing calculations, or generating visualisations. There's a need for interfaces and contextual command palettes designed specifically for scientific workflows, as coding co-pilots already provide for software development.

Alongside orchestrating how activities are conducted, we also need to think about how scientific information is represented and navigated. Emerging interfaces should enable a shared, structured view of scientific content that keeps its context intact across interactions, allowing information to be easily composed, transformed, and analysed. This would unlock things like "semantic zooming", letting scientists explore information at various depths more clearly and precisely as agents perform longer-term tasks.
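One way to picture "semantic zooming" is as a tree of content in which each node carries a summary at its own level of detail, and the interface chooses how deep to render. The sketch below assumes that representation; `Node`, `zoom`, and the example project are hypothetical, and in practice an agent would generate and refresh the summaries as its work progresses.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    title: str
    summary: str  # the content shown when this node is the deepest level rendered
    children: List["Node"] = field(default_factory=list)

def zoom(node: Node, depth: int, indent: int = 0) -> List[str]:
    """Render the tree down to `depth` levels; deeper detail stays folded into summaries."""
    lines = ["  " * indent + f"{node.title}: {node.summary}"]
    if depth > 0:
        for child in node.children:
            lines.extend(zoom(child, depth - 1, indent + 1))
    return lines

# Hypothetical project structure, for illustration only.
project = Node("Project", "one-line summary of the whole venture", [
    Node("Aim 1", "paragraph-level summary", [
        Node("Experiment 1a", "full protocol and results"),
        Node("Experiment 1b", "full protocol and results"),
    ]),
    Node("Aim 2", "paragraph-level summary"),
])

for level in range(3):
    print(f"--- zoom level {level} ---")
    print("\n".join(zoom(project, level)))
```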

From individual to collective: empowering teams through agentic workflows

Currently, there is painfully little work on collaborative LLMs for science, even though science and engineering are team activities; the current landscape lacks collective LLMs designed for team settings. Mechanisms that surface valuable insights to the whole team (which may look like RAG and sensemaking over the team's collective agentic interactions) would multiply collective value beyond what individual members could achieve independently.

Moreover, incentives for improving collaborative scientific models remain largely untapped, presenting fertile ground for new reward mechanisms. The development of LLMs for science relies heavily on individual engagements, with scientists paid by private corporations for their data contributions and expertise. This taps only a fraction of the potential strategies for improving models and agents.

A more holistic approach would look more like Midjourney for Science: public output by default, combining intrinsic rewards, such as recognition within the community, with extrinsic incentives, including financial compensation for ideas or IP generated. Such an approach could fundamentally shift the landscape of contribution and ownership in scientific LLMs, fostering a community that is simultaneously more collaborative and invested in the shared success of these models for the benefit of all scientists.

Rethinking the developmental potential of syncretic AI and human capabilities

I couldn't help but be impressed by the capabilities of LLMs that stretch beyond mere augmentation into the territory of invention. One recent instance stands out: Claude 3 Opus curiously reinvented a quantum algorithm from just two prompts. There are too many examples like this to list here.

However, integrating AI into the scientific process introduces several risks alongside its potential for accelerating invention. We are already seeing them: "Generative AI pollution" in scientific papers, and increased banality and over-reliance on recursive AI systems, leading to a "knowledge collapse" phenomenon in which agents naturally generate outputs towards the "centre" of the data distribution they are trained on, neglecting rare or tail perspectives.
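The tail-neglect mechanism behind "knowledge collapse" can be illustrated with a toy simulation: each generation samples from the previous model but keeps only outputs within one standard deviation of the centre, then refits. This is our own simplified illustration under a Gaussian assumption, not a model of any real LLM; `collapse_simulation` and all its parameters are hypothetical and arbitrary.

```python
import random
import statistics
from typing import List

def collapse_simulation(generations: int = 5, n: int = 10_000,
                        keep_within: float = 1.0, seed: int = 0) -> List[float]:
    """Return the fitted standard deviation after each generation of tail-trimmed refitting."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original, human-written distribution
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        # Keep only "central" outputs; rare/tail perspectives are dropped.
        central = [x for x in samples if abs(x - mu) <= keep_within * sigma]
        # The next generation is fitted only on what survived.
        mu, sigma = statistics.fmean(central), statistics.pstdev(central)
        history.append(sigma)
    return history

for gen, sd in enumerate(collapse_simulation()):
    print(f"generation {gen}: std = {sd:.3f}")
```

With a one-standard-deviation cut-off, each round roughly halves the spread, so the tails all but disappear within a few generations.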

Data insufficiency will continue to limit the potential of LLM agents in science, particularly in domains lacking comprehensive, high-quality data. Safety concerns and a deficit in domain-specific knowledge further compromise research validity (see the latest open-source CRISPR LLM; what could possibly go wrong?!). Moreover, AI's reliance on training data raises the risk of perpetuating misinformation, so outputs require verification against credible sources. The technology's limited grasp of actual research environments and insufficient human feedback can also result in unrealistic or unfeasible proposals.

We must be collectively careful about these challenges and provide more satisfying answers to the whole range of philosophical questions raised by using LLMs for science.

The trajectory of scientific agentic workflows undeniably points toward greater complexity, requiring advances in how we train, architect, and interact with LLM agents. The path ahead is as promising as it is challenging. In Go, the introduction of AlphaGo led to a noticeable increase in players' creativity and the sudden development of new strategies. Could we see a similar boost in scientific innovation thanks to LLMs? Over time, could LLM agents even tackle the productivity-of-science paradox and the increasing burden of knowledge?

While it may be premature to draw conclusions, optimism guides our outlook. One thing is certain: scientific agents will question the conventional limits of research and exploration and, importantly, shift our conceptualisation of them from mere tools towards collaborative partners in the creative process.